FLASHATTENTION: Fast and Memory-Efficient Exact Attention with IO-Awareness (2022)

December 29, 2025Let $N$ be the sequence length. In a standard Transformer, self-attention takes $O(N^2)$ time and $O(N^2)$ memory. Approximate methods such as Reformer: The Efficient Transformer reduce FLOPs by approximating attention, but they often do not yield significant wall-clock speedups and are therefore not widely used. FLASHATTENTION instead targets memory traffic: it reduces data movement between GPU high-bandwidth memory (HBM) and on-chip SRAM, which can be a major bottleneck. HBM is much larger but has lower bandwidth than SRAM; SRAM is fast but small. FLASHATTENTION tiles the computation: it loads blocks of $\mathbf{Q}$, $\mathbf{K}$, and $\mathbf{V}$ into SRAM, updates a block of the output $\mathbf{O}$, and then writes that block back to HBM. By repeating this over all blocks, FLASHATTENTION computes exact attention.

FLASHATTENTION also reduces HBM–SRAM traffic in the backward pass. In a typical implementation, one materializes the attention score matrix $\mathbf{S}$ and the softmax output $\mathbf{P}$ so they can be reused to compute gradients of $\mathbf{Q}$, $\mathbf{K}$, $\mathbf{V}$, and $\mathbf{O}$. When $\mathbf{Q}$, $\mathbf{K}$, $\mathbf{V}\in \mathbb{R}^{N\times d}$, both $\mathbf{S}$ and $\mathbf{P}$ are $O(N^2)$ in size. FLASHATTENTION avoids storing these $N\times N$ matrices by recomputing $\mathbf{S}$ and $\mathbf{P}$ from $\mathbf{O}$ and intermediate values during backpropagation.

FLASHATTENTION uses a numerically stable row-wise softmax (subtracting the row maximum) to compute $\mathbf{O}$.

$$ \begin{align*} \mathbf{S} &= \mathbf{Q}\mathbf{K}^\top \in \mathbb{R}^{N\times N}\\\\ \mathbf{P} &= \textrm{softmax}(\mathbf{S})\in \mathbb{R}^{N\times N}\\\\ \mathbf{O} &=\mathbf{P}\mathbf{V}\in\mathbb{R}^{N\times d}\\\\ m(x) &:= \max_{{i}} x_{i}\\\\ f(x) &:= [e^{x_1-m(x)} \dots e^{x_N-m(x)}]\\\\ \ell(x) &:= \sum_i {f(x)}_i\\\\ \text{softmax}(x) &:= \frac{f(x)}{\ell(x)} \end{align*} $$Subtracting the row maximum $m(x)$ improves numerical stability. Let $d_k$ be the dimension of $\mathbf{K}$. In Attention Is All You Need, the attention logits $\mathbf{Q}\mathbf{K}^\top$ are scaled by $1/\sqrt{d_k}$ to prevent dot products from growing too large (which would make the softmax overly peaked and gradients small).

FLASHATTENTION computes the attention output $\mathbf{O}$ in blocks. The softmax for a concatenated vector, $x=[x^{(1)} x^{(2)}]$, can be decomposed into two parts:

$$ \begin{align*} m(x) &= m([x^{(1)}x^{(2)}])=\max(m(x^{(1)}), m(x^{(2)})) \\\\ f(x) &= \left[ e^{m(x^{(1)})-m(x)}f(x^{(1)})\ \ \ \ e^{m(x^{(2)})-m(x)}f(x^{(2)}) \right]\\\\ \ell(x) &= \ell(\left[x^{(1)}\ x^{(2)}\right])=e^{m(x^{(1)})-m(x)}\ell(x^{(1)})+e^{m(x^{(2)})-m(x)}\ell (x^{(2)}) \\\\ \text{softmax}(x)&=\frac{f(x)}{\eWExll(x)} \end{align*} $$FLASHATTENTION uses this decomposition to reduce I/O when transferring data between HBM and SRAM.

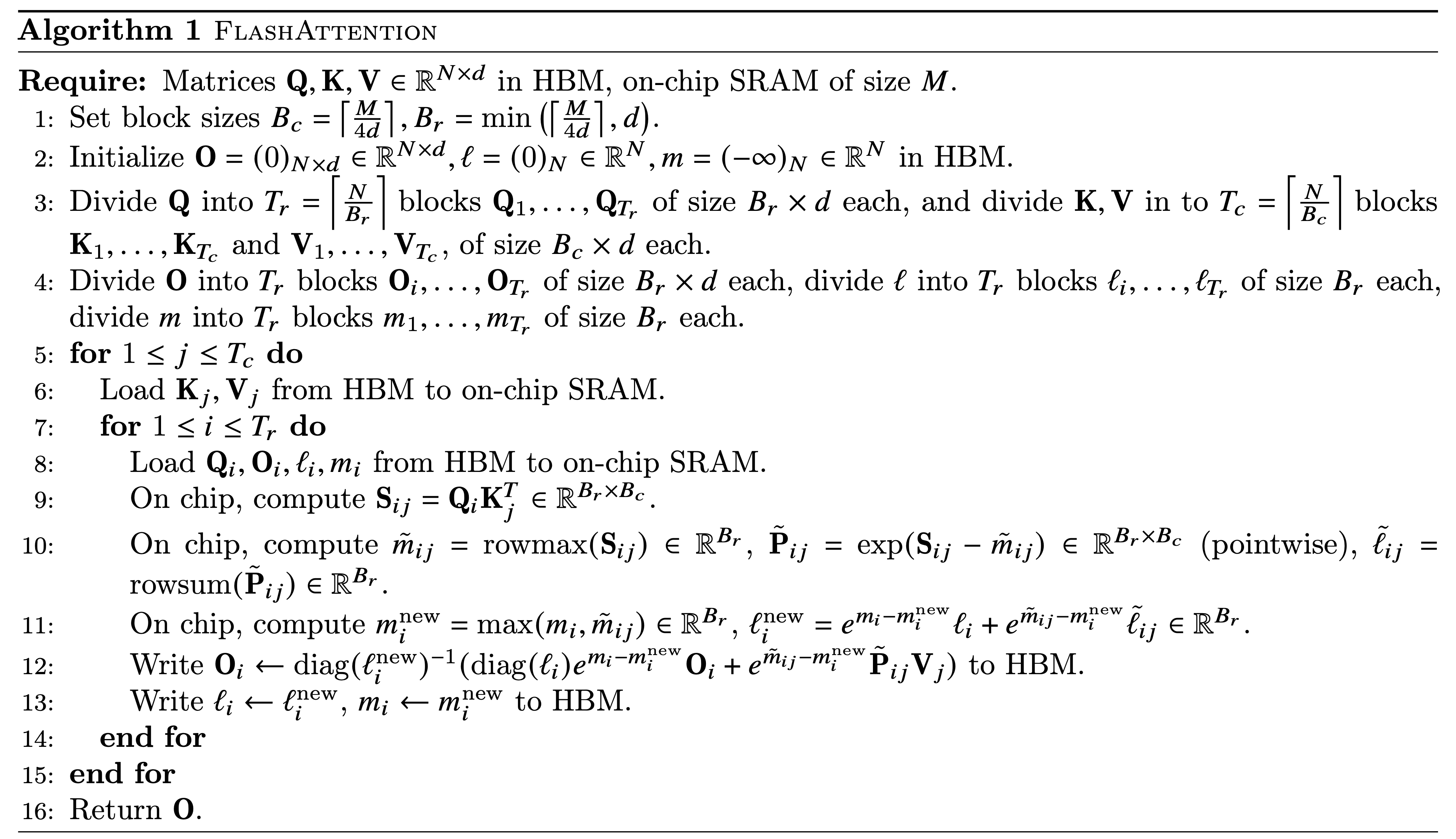

The algorithm is shown below:

The algorithm can be proved by induction. Let the first $jB_c$ blocks of $\mathbf{K}, \mathbf{V}$ be $\mathbf{K}_{:j}\in\mathbb{R}^{jB_c\times d}$ and $\mathbf{V}_{:j}\in\mathbb{R}^{jB_c\times d}$. Additionally, let $\mathbf{S}_{:,:j}$ be $\mathbf{Q}\mathbf{K}^{\top}_{:j}\in\mathbb{R}^{N\times jB_c}$, and let $\mathbf{P}_{:,:j}$ be $\text{softmax}(\mathbf{S}_{:,:j})\in\mathbb{R}^{N\times jB_c}$. The paper denotes the values of $m$, $\ell$, and $\mathbf{O}$ after the $j$-th outer loop as $m^{(j)}$, $\ell^{(j)}$, and $\mathbf{O}^{(j)}$, and proves the following by induction:

$$ \begin{align*} m^{(j)}&=\textrm{rowmax}(\mathbf{S}_{:,:j})\in\mathbb{R}^N\\\\ \ell^{(j)}&=\textrm{rowsum}(\exp(\mathbf{S}_{:,:j}-m^{(j)}))\in\mathbb{R}^N\\\\ \mathbf{O}^{(j)}&=\mathbf{P}_{:,:j}\mathbf{V}_{:j}\in\mathbb{R}^{N\times d} \end{align*} $$FLASHATTENTION computes $\mathbf{S}$ in blocks. Let the block of columns from $B_C(j-1)+1$ to $B_Cj$ be $\mathbf{S}_{:,j-1:j}\in \mathbb{R}^{N\times B_C}$; the $j$th outer loop computes $\mathbf{S}_{:,j-1:j}$. The inner loop computes blocks of size $B_r\times B_c$ within $\mathbf{S}_{:,j-1:j}$ from top to bottom. At the end of the $j$th outer loop, we have $\textrm{rowmax}(\mathbf{S}_{:,j-1:j})$.

The update in line 11 of the algorithm, together with the steps above, implies that:

$$ \begin{align*} m^{(j+1)}&=\text{max}(\textrm{rowmax}(\mathbf{S}_{:,:j}), \textrm{rowmax}(\mathbf{S}_{:,j:j+1}))\\\\ m^{(j+1)}&=\text{rowmax}(\mathbf{S}_{:,:j+1}) \end{align*} $$Under the induction hypothesis, $e^{m_i}\ell_i = \text{rowsum}(\exp(\mathbf{S}_{i,:j-1}))$ and $e^{\tilde{m}_{ij}}\tilde{\ell}_{ij} = \text{rowsum}(\exp(\mathbf{S}_{ij}))$; line 11 then implies:

$$ \ell^{(j+1)}=\text{rowsum}(\exp(\mathbf{S}_{:,:j+1}-m^{(j+1)}))\in\mathbb{R}^N $$Let $\mathbf{V}_{:j}$ be the first $B_Cj$ columns of $\mathbf{V}$, and let $\tilde{m}$ be $\textrm{rowmax}(\mathbf{S}_{:,j:j+1})$:

$$ \begin{align*} \mathbf{O}^{(j+1)} &=\textrm{diag}(\ell^{(j+1)})^{-1}(\textrm{diag}(\ell^{(j)})e^{m^{(j)}-m^{(j+1)}}\mathbf{O}^{(j)} + e^{\tilde{m}-m^{(j+1)}}\exp(\mathbf{S}_{:,j:j+1}-\tilde{m})\mathbf{V}_{j+1})\\\\ &=\textrm{diag}(\ell^{(j+1)})^{-1}(\textrm{diag}(\ell^{(j)})e^{m^{(j)}-m^{(j+1)}}\mathbf{P}_{:,:j}\mathbf{V}_{:j} + e^{-m^{(j+1)}}\exp(\mathbf{S}_{:,j:j+1})\mathbf{V}_{j+1})\\\\ &=\textrm{diag}(\ell^{(j+1)})^{-1}(\textrm{diag}(\ell^{(j)})e^{m^{(j)}-m^{(j+1)}}\textrm{diag}(\ell^{(j)})\exp (\mathbf{S}_{:,:j}-m^{(j)})\mathbf{V}_{:j}+ e^{-m^{(j+1)}}\exp(\mathbf{S}_{:,j:j+1})\mathbf{V}_{j+1})\\\\ &=\textrm{diag}(\ell^{(j+1)})^{-1}(e^{-m^{(j+1)}}\exp (\mathbf{S}_{:,:j})\mathbf{V}_{:j}+e^{-m^{(j+1)}}\exp(\mathbf{S}_{:,:j:j+1})\mathbf{V}_{j+1})\\\\ &=\textrm{diag}(\ell^{(j+1)})^{-1}(\exp(\mathbf{S}_{:,:j}-m^{(j+1)})\mathbf{V}_{:j}+\exp (\mathbf{S}_{:,j:j+1}-m^{(j+1)})\mathbf{V}_{j+1})\\\\ &=\textrm{softmax}(\mathbf{S}_{:,:j+1})\mathbf{V}_{j+1} \end{align*} $$Therefore the claim holds for $j+1$, completing the induction; FLASHATTENTION computes exact self-attention in Transformers.